Docker has skyrocketed in popularity over the years. Postgres (also known as PostgreSQL) is an open-source, standards-compliant, object-relational database that has been developed for over 30 years. This official feature matrix shows the richness of features that Postgres has.

Running Postgres with Docker and docker-compose makes it very easy to run and maintain, especially in a development environment. In this post, we'll look at how to run and use Postgres with Docker and docker-compose step by step, keeping things simple and easy. Let's get started!

Table of Contents

- Why use Postgres with Docker for local development

- Prerequisites

- Postgres with Docker

- PostgreSQL with docker-compose

- Adding Postgres with Docker to an existing Node.js project

- Conclusion

Why use Postgres with Docker for local development #

There are many good reasons to use a database like Postgres with Docker for local development. Here are some of them:

- Using multiple versions of PostgreSQL, depending on the project or any other need, is very easy.

- In general, with Docker, if it runs on your machine, it will run for your friend, in a staging environment, and in a production environment, provided that version compatibility is maintained.

- When a new team member joins, they can get started in hours; it doesn't take days to become productive.

You can read more about this in the post on why to use Docker. In the next section, we'll look at the prerequisites before diving into the commands for running Postgres with Docker.

Prerequisites #

Before we dive into some CLI commands and a bit of code, below are some prerequisites that are best to have:

- Basic knowledge of Docker will come in handy, such as executing commands like docker run, docker exec, etc. For this tutorial, Docker version 20.10.10 will be used on a Mac.

- Any prior understanding of Docker Compose would be useful but is not necessary. For this guide, we'll use version 1.29.1 of docker-compose on a Mac.

- An intermediate understanding of how relational databases work, especially PostgreSQL, would be very beneficial.

- We will be using an existing Node.js and Postgres application/API, replacing a remote Postgres database with a local one running with Docker and docker-compose, so it would be advisable to read the previous post about it.

With those prerequisites covered, we can move on to the next section, where we will run some Docker commands. Get those itchy fingers ready.

Postgres with Docker #

For this post, we will use the official Postgres Alpine image from Docker Hub, with PostgreSQL 14.1, the latest version at the time of writing.

The default Postgres Docker image for this version is 130 MB, while the Alpine variant of the same version is 78 MB, which is much smaller.

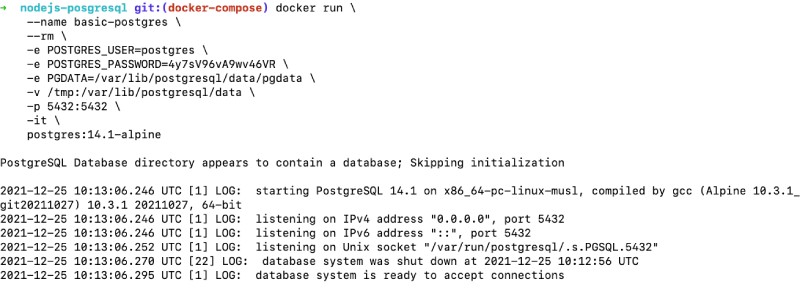

To run the container using the Postgres 14.1 Alpine image, we can run the following command:

    docker run --name basic-postgres --rm \
      -e POSTGRES_USER=postgres \
      -e POSTGRES_PASSWORD=4y7sV96vA9wv46VR \
      -e PGDATA=/var/lib/postgresql/data/pgdata \
      -v /tmp:/var/lib/postgresql/data \
      -p 5432:5432 -it postgres:14.1-alpine

Let's evaluate what the above command does. It runs a container from the postgres:14.1-alpine image, which Docker pulls from Docker Hub by default if it doesn't exist locally.

First, --name gives the running container the name basic-postgres, and --rm removes the container and its file system when the container exits. A few environment variables set the Postgres user, the password, and the data directory (PGDATA) to keep things simple.

The last three parameters are the interesting ones. -v adds a volume to store data; in this example, it maps the host's /tmp directory, so all data will be lost when the machine restarts. Next, the -p parameter maps host port 5432 to container port 5432.

The final parameter, -it, keeps STDIN open and allocates a TTY. When we execute the command, we will see output showing that the container is running and ready to accept connections. If we then execute the following command, we can go inside the container and run psql to see the postgres database, whose name matches the username provided in the POSTGRES_USER environment variable.

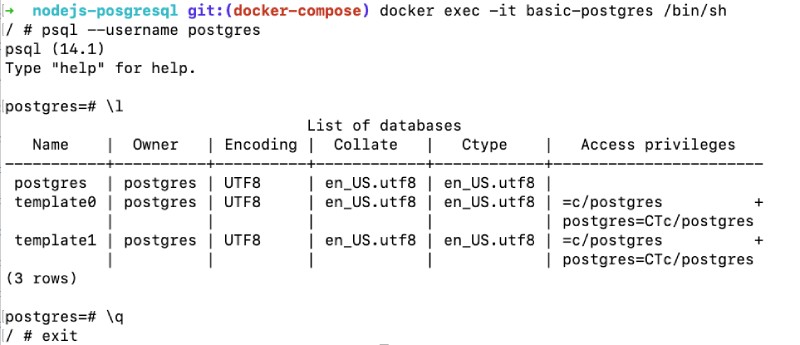

docker exec -it basic-postgres /bin/sh

Once inside the container, we can run psql --username postgres to access the Postgres CLI. To list the databases, we can run \l inside psql and see the postgres database in the list.

As seen in the previous image, \q exits the psql CLI, and exit leaves the container's shell.

If we go back to the terminal where the container is running and press Ctrl + C, it will stop the container and clean it up, since we used the --rm flag when running it.

This is one way to run Postgres with Docker, but as we have seen, it is not easy to remember the long command and all the necessary parameters.
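If you do stick with plain docker run, one way to avoid retyping the command is to script it. Below is a minimal sketch in Node.js, the language of the project used later in this post. The helper names buildDockerRunArgs and runDocker and the options object are hypothetical; the values simply mirror the command above. Building an argument array instead of one long string lets child_process.spawn run Docker without going through a shell.

```javascript
const { spawn } = require("child_process");

// Hypothetical helper: turn an options object into the argument list for
// `docker run`, mirroring the flags explained above.
function buildDockerRunArgs({ name, image, env = {}, volumes = [], ports = [], rm = true, interactive = true }) {
  const args = ["run", "--name", name];
  if (rm) args.push("--rm"); // remove the container and its file system on exit
  for (const [key, value] of Object.entries(env)) {
    args.push("-e", `${key}=${value}`); // one -e flag per environment variable
  }
  for (const volume of volumes) args.push("-v", volume); // host:container mounts
  for (const port of ports) args.push("-p", port); // host:container port mappings
  if (interactive) args.push("-it"); // keep STDIN open and allocate a TTY
  args.push(image); // the image must come after all the options
  return args;
}

// Start Docker with the given arguments, streaming its output to this terminal.
function runDocker(args) {
  return spawn("docker", args, { stdio: "inherit" });
}

const args = buildDockerRunArgs({
  name: "basic-postgres",
  image: "postgres:14.1-alpine",
  env: {
    POSTGRES_USER: "postgres",
    POSTGRES_PASSWORD: "4y7sV96vA9wv46VR",
    PGDATA: "/var/lib/postgresql/data/pgdata",
  },
  volumes: ["/tmp:/var/lib/postgresql/data"],
  ports: ["5432:5432"],
});
```

Calling runDocker(args) would then start the same container, assuming Docker is installed and on your PATH. Even so, a script like this only hides the flags; it still does not describe how the database relates to the application.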

In addition, we have not specified any link between the database and our application. This is where the docker-compose file and docker-compose command come in handy as seen in the next section.

PostgreSQL with docker-compose #

To run the same Postgres 14.1-alpine image with docker-compose, we will create a docker-compose-pg-only.yml file with the following contents:

    version: '3.8'
    services:
      db:
        image: postgres:14.1-alpine
        restart: always
        environment:
          - POSTGRES_USER=postgres
          - POSTGRES_PASSWORD=postgres
        ports:
          - '5432:5432'
        volumes:
          - db:/var/lib/postgresql/data
    volumes:
      db:
        driver: local

The docker-compose file has a few things you should note:

- We use version 3.8 of the docker-compose file format.

- Next, we define db as a service; each service is equivalent to a new docker run command.

- We ask docker-compose to make the service use the Postgres 14.1 Alpine image and to always restart the container if it stops.

- Then we define two environment variables to pass the Postgres user and password. Note that because we don't pass a database name (POSTGRES_DB), the official image uses the user name as the database name.

- We then map host port 5432 to container port 5432, as Postgres listens on that port inside the container.

- After that, we ask docker-compose to manage a volume named db that uses the local driver. Therefore, when the container is restarted, the data will still be available from the Docker-managed volume. To see the volume, we can run docker volume ls and then inspect the volume attached to our Postgres container with docker volume inspect.
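To make the default-database rule from the list above concrete, here is a small sketch of how the official image picks its defaults: POSTGRES_USER falls back to postgres, and POSTGRES_DB falls back to the user name. The function name resolvePostgresDefaults is hypothetical, and this is a simplified model written in Node.js; the image's real logic lives in its docker-entrypoint.sh shell script and only applies when the data directory is first initialized.

```javascript
// Simplified model of the official postgres image's defaults.
// The real logic lives in the image's docker-entrypoint.sh and applies on first start.
function resolvePostgresDefaults(env) {
  // Initialization is refused without a password, unless trust authentication is chosen.
  if (!env.POSTGRES_PASSWORD && env.POSTGRES_HOST_AUTH_METHOD !== "trust") {
    throw new Error("POSTGRES_PASSWORD must be set");
  }
  const user = env.POSTGRES_USER || "postgres"; // superuser name, defaults to postgres
  const database = env.POSTGRES_DB || user; // database name, defaults to the user name
  return { user, database };
}

// The compose file above sets only the user and password:
console.log(resolvePostgresDefaults({ POSTGRES_USER: "postgres", POSTGRES_PASSWORD: "postgres" }));
// → { user: 'postgres', database: 'postgres' }
```

So if you changed POSTGRES_USER to another name without setting POSTGRES_DB, the database created on first start would take that new name too.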

After that explanation, to start the container we will run docker-compose -f docker-compose-pg-only.yml up, which will show output like the following:

![docker-compose up output for Postgres container](https://geshan.com.np/images/docker-postgres/04docker-compose-up-postgres-only.jpg)

So the Postgres database is running, and this time it took a one-line command rather than a long one to start it, since all the parameters it needs are in the docker-compose file.

At this point, Postgres in the container will behave like a local instance of Postgres, as we have mapped container port 5432 to local port 5432.
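Because of that port mapping, any client on the host connects to localhost:5432 just as it would to a locally installed Postgres. As an illustration, here is a sketch of a hypothetical buildConnectionUri helper that assembles a standard postgresql:// connection URI from the credentials in the compose file; clients such as psql and the node-postgres (pg) library accept URIs in this format.

```javascript
// Hypothetical helper: build a PostgreSQL connection URI.
// User, password, and database are percent-encoded so special characters stay valid.
function buildConnectionUri({ user, password, host = "localhost", port = 5432, database }) {
  const auth = `${encodeURIComponent(user)}:${encodeURIComponent(password)}`;
  return `postgresql://${auth}@${host}:${port}/${encodeURIComponent(database)}`;
}

// Values from docker-compose-pg-only.yml, as seen from the host machine.
const uri = buildConnectionUri({ user: "postgres", password: "postgres", database: "postgres" });
console.log(uri); // postgresql://postgres:postgres@localhost:5432/postgres
```

From another container on the same Compose network, the host would be the service name (db) instead of localhost.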

Next, we'll see how to modify the docker-compose file to fit an existing project.

Adding Postgres with Docker to an existing Node.js project #

Now that we've seen PostgreSQL run with docker-compose, we'll integrate it with an existing Node.js API project. A complete step-by-step tutorial on how to build this quotes API project with Node.js and Postgres is available for your reference. For this guide, we will add a docker-compose.yml file with the following content:

    version: '3.8'
    services:
      db:
        image: postgres:14.1-alpine
        restart: always
        environment:
          - POSTGRES_USER=postgres
          - POSTGRES_PASSWORD=postgres
        ports:
          - '5432:5432'
        volumes:
          - db:/var/lib/postgresql/data
          - ./db/init.sql:/docker-entrypoint-initdb.d/create_tables.sql
      api:
        container_name: quotes-api
        build:
          context: ./
          target: production
        image: quotes-api
        depends_on:
          - db
        ports:
          - 3000:3000
        environment:
          NODE_ENV: production
          DB_HOST: db
          DB_PORT: 5432
          DB_USER: postgres
          DB_PASSWORD: postgres
          DB_NAME: postgres
        links:
          - db
        volumes:
          - './:/src'
    volumes:
      db:
        driver: local

This file looks somewhat similar to the docker-compose file above, but below are the main differences.

The first is that here we use - ./db/init.sql:/docker-entrypoint-initdb.d/create_tables.sql on line 13. We do this to create the quote table and populate it with the data in this SQL file. This is how to run initialization scripts for Postgres with Docker. The operation is idempotent: if the data directory is not empty, the init.sql file will not run again, which prevents the existing data from being overwritten. If we want to force the data to be recreated, we have to delete the Docker volume after inspecting it.
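The run-once behavior described above can be modeled in a few lines. This is a simplified sketch, not the image's real entrypoint (a shell script): scripts in /docker-entrypoint-initdb.d run only when the data directory is empty, in sorted name order, and only certain file types are picked up. The function name initScriptsToRun is hypothetical, and the extension list here is deliberately limited to .sh, .sql, and .sql.gz.

```javascript
// Simplified model of when the official image runs init scripts (not the real entrypoint).
const SUPPORTED_EXTENSIONS = [".sh", ".sql", ".sql.gz"];

function initScriptsToRun(dataDirEntries, initDirEntries) {
  if (dataDirEntries.length > 0) {
    return []; // existing data: initialization is skipped entirely
  }
  return initDirEntries
    .filter((file) => SUPPORTED_EXTENSIONS.some((ext) => file.endsWith(ext)))
    .sort((a, b) => a.localeCompare(b)); // approximates the image's sorted name order
}

// First start with an empty volume: create_tables.sql runs.
console.log(initScriptsToRun([], ["create_tables.sql"])); // [ 'create_tables.sql' ]
// Later starts reuse the db volume, so nothing runs again.
console.log(initScriptsToRun(["PG_VERSION", "base"], ["create_tables.sql"])); // []
```

This is also why deleting the volume is the way to re-run the script: it gives Postgres an empty data directory again.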

Next, we define a new service called api that builds the local Dockerfile with the production target and names both the image and the container quotes-api. It also has a depends_on definition pointing to the db service, which is our Postgres container.
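One caveat with depends_on in this file format: it only controls start order, so Compose starts api after the db container has started, not after Postgres is ready to accept connections. A common workaround is to retry the first connection in the application. Below is a minimal sketch with a hypothetical connectWithRetry helper; connect stands in for whatever your database client uses to open a connection, which is an assumption rather than code from the quotes API project.

```javascript
// Hypothetical helper: retry an async connect function with a fixed delay.
// depends_on only orders container start-up, so Postgres may still be
// initializing when the API container starts.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function connectWithRetry(connect, { retries = 5, delayMs = 1000 } = {}) {
  for (let attempt = 1; ; attempt++) {
    try {
      return await connect();
    } catch (err) {
      if (attempt > retries) throw err; // give up after the first try plus `retries` retries
      console.warn(`DB not ready (attempt ${attempt}), retrying in ${delayMs} ms`);
      await sleep(delayMs);
    }
  }
}

// Example with a stand-in connect function that fails twice, then succeeds.
let calls = 0;
const fakeConnect = async () => {
  calls += 1;
  if (calls < 3) throw new Error("ECONNREFUSED");
  return "connected";
};
connectWithRetry(fakeConnect, { retries: 5, delayMs: 10 }).then((result) => console.log(result, calls)); // connected 3
```

A fixed delay keeps the sketch simple; exponential backoff is a common alternative if start-up times vary a lot.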

It then maps host port 3000 to the exposed container port 3000, where the Node.js Express API server runs. In the environment variables, we set db as the host, which resolves to the Postgres service defined above, and reuse the same credentials provided in its definition. The links entry also points to that Postgres container, defined before the API service.
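On the application side, those environment variables have to be read into the database client's configuration. Here's a sketch of how that could look; buildDbConfig is a hypothetical helper, not taken from the quotes API project, and the returned object uses the host, port, user, password, and database keys that the node-postgres (pg) Pool and Client constructors accept.

```javascript
// Hypothetical helper: build DB settings from the environment variables set in docker-compose.yml.
function buildDbConfig(env = process.env) {
  const required = ["DB_HOST", "DB_USER", "DB_PASSWORD", "DB_NAME"];
  const missing = required.filter((key) => !env[key]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    host: env.DB_HOST, // "db": the Compose service name, resolved on the Compose network
    port: Number(env.DB_PORT || 5432), // env values are strings; default to Postgres's port
    user: env.DB_USER,
    password: env.DB_PASSWORD,
    database: env.DB_NAME,
  };
}

// With the values from docker-compose.yml:
const config = buildDbConfig({
  DB_HOST: "db", DB_PORT: "5432", DB_USER: "postgres", DB_PASSWORD: "postgres", DB_NAME: "postgres",
});
console.log(config.host, config.port); // db 5432
```

With pg installed, new Pool(buildDbConfig()) inside the api container would pick up these settings from process.env.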

Finally, we map all the local files to the container's /src directory so that they run with Node.js inside the container.

This file is also available on GitHub for your reference.

When we run docker-compose up at the root of this project, we can see the following output:

![docker-compose up output for Postgres and Node.js Express](https://geshan.com.np/images/docker-postgres/05docker-compose-up-postgres-and-nodejs.jpg)

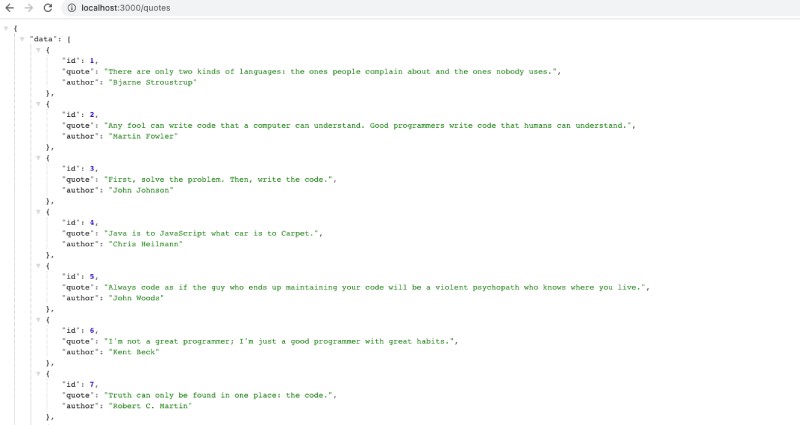

As the web server is mapped to local port 3000, we can see the API's output by opening http://localhost:3000/quotes in any browser.

Hooray! Our Node.js Express API for quotes is communicating with the local PostgreSQL database as expected. If you want to quickly check out the docker-compose up experience, clone this GitHub repository and give it a try.

If Node.js with MySQL is your flavor of choice, read this guide as well. You can also try Node.js with SQLite if that works best for you.

Conclusion #

In this post, we saw how to run Postgres with plain Docker and then added the docker-compose goodness to make things easier. After that, we added Postgres to an existing Node.js API to make local development much easier.

I hope this makes it easier to understand how to run Postgres with Docker quickly and easily.