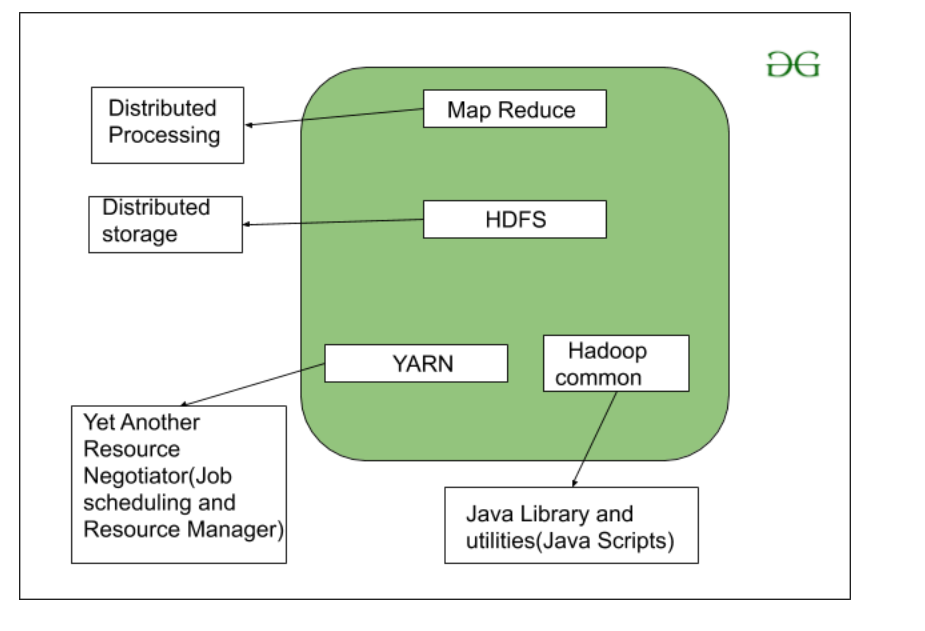

Hadoop is a framework written in Java that uses a large cluster of commodity hardware to store and process large volumes of data. Hadoop is built on the MapReduce programming model, which was introduced by Google. Today, many large companies use Hadoop to deal with big data, for example Facebook, Yahoo, Netflix, and eBay. The Hadoop architecture consists mainly of 4 components:

- MapReduce

- HDFS (Hadoop Distributed File System)

- YARN (Yet Another Resource Negotiator)

- Common Utilities or Hadoop Common

Let’s understand the role of each of these components in detail.

1. MapReduce

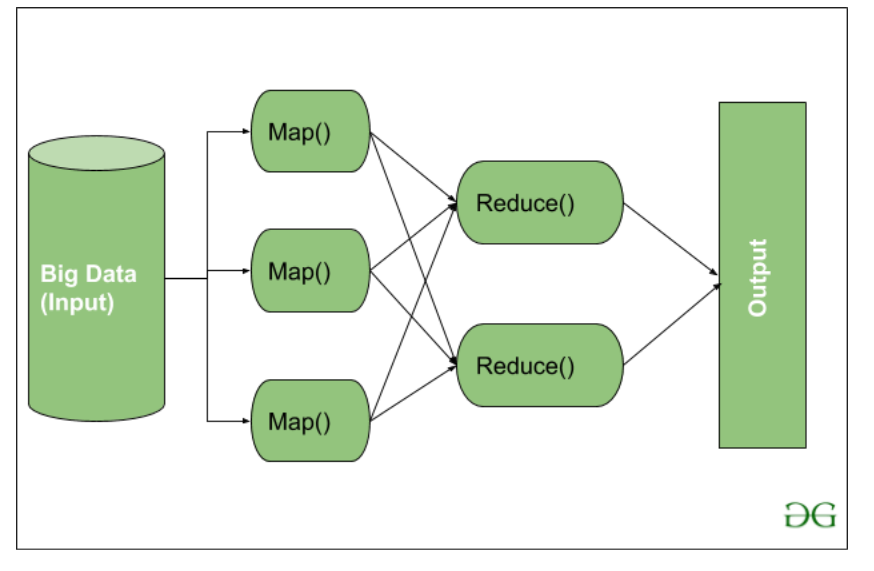

MapReduce is a programming model that runs on top of the YARN framework. Its main feature is parallel, distributed processing across a Hadoop cluster, which is what makes Hadoop fast. When it comes to Big Data, serial processing is no longer practical. MapReduce mainly has 2 tasks, which are divided into phases:

- In the first phase, Map is used.

- In the next phase, Reduce is used.

Here, we can see that the input is provided to the Map() function, then its output is used as input to the Reduce() function, and after that we receive our final output. Let’s understand what Map() and Reduce() do.

The input to Map() is a set of data, since we are working with Big Data. The Map() function breaks these data blocks into tuples, which are nothing more than key-value pairs. These key-value pairs are then sent as input to Reduce(). The Reduce() function combines the tuples based on their key, forms a new set of tuples, and performs an operation such as sorting or summation. The result is then sent to the final output node.

The processing done in the Reducer always depends on the business requirements of that industry. This is how Map() is used first and Reduce() second, one after the other.
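The Map, group-by-key, and Reduce flow described above can be sketched in a few lines of plain Python. This is only an in-memory illustration under stated assumptions, not Hadoop's API: the word-count job and the names `map_fn`, `shuffle`, `reduce_fn`, and `run_job` are all hypothetical and chosen for this example.

```python
from collections import defaultdict


def map_fn(line):
    """Map: emit a (word, 1) key-value pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group all intermediate values by key, as happens between the two phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_fn(key, values):
    """Reduce: aggregate every value that shares a key (a sum in this example)."""
    return key, sum(values)


def run_job(lines):
    """Run Map on every line, group by key, then run Reduce once per key."""
    intermediate = [pair for line in lines for pair in map_fn(line)]
    grouped = shuffle(intermediate)
    return dict(reduce_fn(key, values) for key, values in sorted(grouped.items()))


result = run_job(["big data big cluster", "data node"])
print(result)
```

In a real cluster the Map calls run in parallel on different nodes; here they run one after another, which is enough to show how the key-value pairs move from one phase to the next.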

Let’s understand the map task

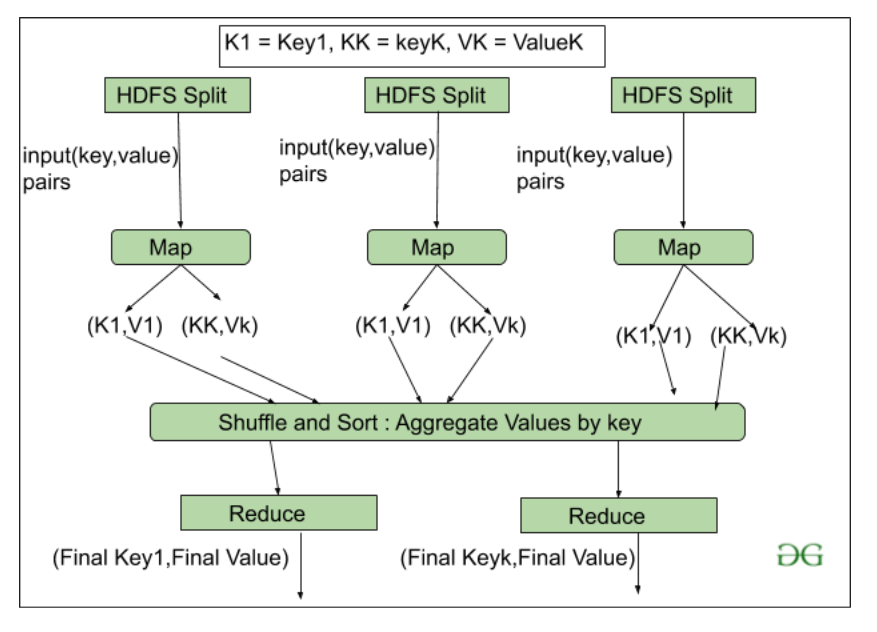

and the task of reducing in detail. Map task: RecordReader The purpose of recordreader is to break records.

- It is responsible for providing key-value pairs in a Map() function. The key is actually your location information and the value is the data associated with it.

- Map: A map is a user-defined function whose job is to process the tuples obtained from the RecordReader. For each input tuple, the Map() function may generate no key-value pairs at all, or it may generate multiple pairs.

- Combiner: The combiner is used to group data in the Map workflow. It is similar to a local reducer. The intermediate key-value pairs generated by the Map are combined with the help of this combiner. Using a combiner is optional.

- Partitioner: The partitioner is responsible for fetching the key-value pairs generated in the Mapper phase. It generates the shards corresponding to each reducer. The partitioner obtains the hash code of each key and then takes its modulus with the number of reducers (key.hashCode() % (number of reducers)).
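The hash-modulo rule used by the partitioner can be sketched as follows. This is an illustrative Python version, not Hadoop code: `java_string_hash` is a hypothetical helper that imitates Java's `String.hashCode()` for simple keys, and masking the sign bit before taking the modulus is an added safeguard (assumed here) so that a negative hash code never produces a negative reducer index.

```python
def java_string_hash(s):
    """Imitate Java's String.hashCode() and return a signed 32-bit integer."""
    h = 0
    for ch in s:
        h = (31 * h + ord(ch)) & 0xFFFFFFFF  # keep only the low 32 bits
    return h - 0x100000000 if h >= 0x80000000 else h


def partition(key, num_reducers):
    """Pick the reducer index for a key: hash code modulo number of reducers."""
    # Clear the sign bit first so the result is always in [0, num_reducers).
    return (java_string_hash(key) & 0x7FFFFFFF) % num_reducers


keys = ["apple", "banana", "cherry", "apple"]
assignments = [(key, partition(key, 3)) for key in keys]
print(assignments)
```

Because the index depends only on the key, every occurrence of the same key (both "apple" entries above) is routed to the same reducer, which is what lets the reducer see all values for that key.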

Reduce Task:

- Shuffle and Sort: The Reducer task begins with this step. The process in which the Mapper generates the intermediate key-value pairs and transfers them to the Reducer task is known as shuffling. Using the shuffling process, the system can sort the data by its key. Shuffling begins as soon as some of the Map tasks are done, without waiting for all Mappers to complete, which makes the process faster.

- Reduce: The main task of Reduce is to gather the tuples generated by Map and then perform sorting and aggregation on those key-value pairs, depending on their key.

- OutputFormat: Once all operations are performed, the key-value pairs are written to the output file with the help of the RecordWriter, each record on a new line, with the key and value separated by a delimiter (a tab by default in TextOutputFormat).
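The three Reduce-side steps above (shuffle and sort, reduce, and writing the output) can be sketched in Python. This is a simplified in-memory model, not Hadoop's implementation: the function names are hypothetical, the reduce logic is assumed to be a sum, and the separator is a parameter that is assumed to default to a tab.

```python
from itertools import groupby
from operator import itemgetter


def shuffle_and_sort(mapper_outputs):
    """Merge the key-value pairs from every mapper and sort them by key."""
    merged = [pair for output in mapper_outputs for pair in output]
    return sorted(merged, key=itemgetter(0))


def reduce_phase(sorted_pairs):
    """Run the reduce logic (a sum here) once for each distinct key."""
    for key, group in groupby(sorted_pairs, key=itemgetter(0)):
        yield key, sum(value for _, value in group)


def format_output(records, separator="\t"):
    """Write one record per line, with key and value joined by the separator."""
    return "\n".join(f"{key}{separator}{value}" for key, value in records)


mapper_outputs = [
    [("data", 1), ("big", 1)],   # pairs emitted by a first mapper
    [("big", 1), ("node", 1)],   # pairs emitted by a second mapper
]
output_text = format_output(reduce_phase(shuffle_and_sort(mapper_outputs)))
print(output_text)
```

Sorting before grouping matters: `groupby` only merges adjacent items, which mirrors why the framework sorts by key before handing data to the reducer.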

2. HDFS

HDFS

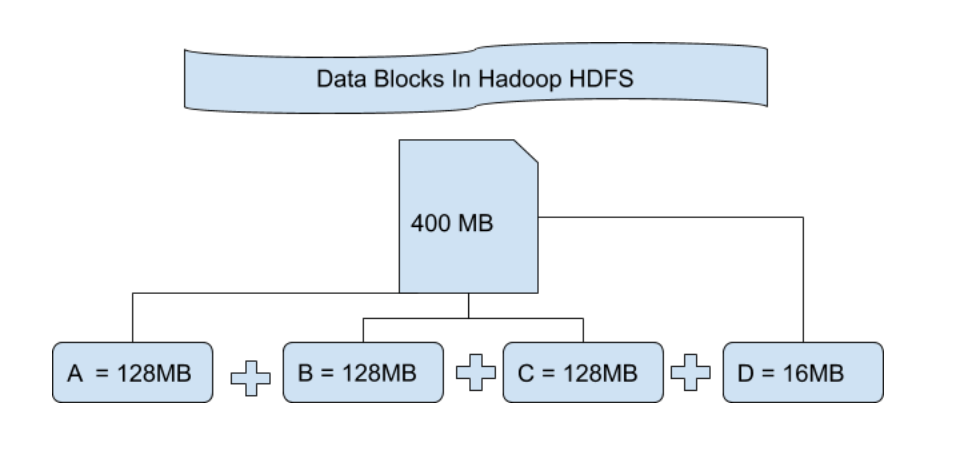

(Hadoop Distributed File System) is used for the storage permission. It is primarily designed to work on basic hardware devices (low-cost devices), working on a distributed file system design. HDFS is designed in such a way that it believes more in storing the data in a large part of blocks rather than storing small blocks of data.

HDFS in Hadoop provides fault tolerance and high availability to the storage layer and to the other devices present in the Hadoop cluster. The data storage nodes in HDFS are:

- NameNode (Master)

- DataNode (Slave)

NameNode: The NameNode works as the Master in a Hadoop cluster and guides the DataNodes (Slaves). The NameNode is mainly used to store metadata, i.e. data about the data. Metadata can be the transaction logs that track user activity in a Hadoop cluster.

Metadata can also be the file name, size, and location information (block number, block IDs) of the DataNodes, which the NameNode stores in order to find the closest DataNode for faster communication. The NameNode instructs the DataNodes to perform operations such as delete, create, and replicate.

DataNode: DataNodes work as Slaves. They are mainly used to store the data in a Hadoop cluster, and their number can range from 1 to 500 or even more. The more DataNodes there are, the more data the Hadoop cluster can store. It is therefore recommended that a DataNode have a high storage capacity so that it can store a large number of file blocks.

![Hadoop High-Level Architecture](https://media.geeksforgeeks.org/wp-content/uploads/20200621121240/Namenode-and-Datanode.png)

File Block in HDFS: Data in HDFS is always stored in terms of blocks. A single file is therefore divided into multiple blocks of 128MB each, which is the default size and can be changed manually.

Let’s understand this concept of breaking down a file into blocks with an example. Suppose you have uploaded a 400MB file to HDFS. The file is divided into blocks of 128MB + 128MB + 128MB + 16MB = 400MB. That means 4 blocks are created, each of 128MB except the last one. Hadoop doesn’t know or care what data is stored in these blocks, so the final block may end with a partial record. In the Linux file system, the size of a file block is about 4KB, which is much smaller than the default block size in the Hadoop file system. Hadoop is mainly configured to store very large data, up to petabytes, and its ability to scale is what makes the Hadoop file system different from other file systems. Nowadays, file blocks of 128MB to 256MB are typical in Hadoop.
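The block arithmetic in this example can be checked with a short Python sketch. The function `split_into_blocks` is a hypothetical helper written for this illustration and only models the sizes; it does not touch a real file system.

```python
def split_into_blocks(file_size_mb, block_size_mb=128):
    """Return the block sizes (in MB) that a file of the given size occupies."""
    if file_size_mb < 0 or block_size_mb <= 0:
        raise ValueError("file size must be >= 0 and block size must be > 0")
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    # Every block is full-sized except possibly the last one.
    return [block_size_mb] * full_blocks + ([remainder] if remainder else [])


blocks = split_into_blocks(400)
print(blocks)  # [128, 128, 128, 16]
```

Passing a different `block_size_mb`, for example 256, shows how a larger block size reduces the number of blocks for the same file.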

Replication in HDFS: Replication ensures the availability of data. Replication means making a copy of something, and the number of copies made of that particular thing is its replication factor. As we saw in the File Block section, HDFS stores data in the form of blocks; Hadoop is also configured to make copies of those file blocks.

By default, the replication factor in Hadoop is set to 3, and you can change it manually based on your requirements. In the example above we created 4 file blocks, so 3 replicas of each file block are made, which means a total of 4×3 = 12 blocks are stored for backup purposes.
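The effect of the replication factor on block count and raw storage can be worked through in Python. This is a back-of-the-envelope sketch: `replicated_storage` is a hypothetical helper, and the block sizes are taken from the 400MB example (three 128MB blocks and one 16MB block).

```python
def replicated_storage(block_sizes_mb, replication_factor=3):
    """Return (total stored blocks, total raw storage in MB) after replication."""
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    total_blocks = len(block_sizes_mb) * replication_factor
    raw_storage_mb = sum(block_sizes_mb) * replication_factor
    return total_blocks, raw_storage_mb


total_blocks, raw_storage_mb = replicated_storage([128, 128, 128, 16])
print(total_blocks, raw_storage_mb)  # 12 1200
```

With the default factor of 3, the 400MB file therefore occupies 1200MB of raw cluster storage, which is the storage cost that buys fault tolerance.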

This is because Hadoop runs on commodity hardware (inexpensive system hardware) that can crash at any time; we are not using a supercomputer for our Hadoop setup. That is why HDFS needs a feature that makes copies of the file blocks for backup purposes. This is known as fault tolerance.

One thing we should also note is that making so many replicas of our file blocks uses a lot of extra storage. For large organizations, however, the data is far more important than the storage, so this overhead is accepted. You can configure the replication factor in the hdfs-site.xml file.

Rack Awareness: A rack is nothing more than a physical collection of nodes in a Hadoop cluster (perhaps 30 to 40). A large Hadoop cluster consists of many racks. With the help of this rack information, the NameNode chooses the closest DataNode for read/write operations, which maximizes performance and reduces network traffic.
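The idea of picking the closest DataNode from rack information can be sketched as a small Python model. Everything here is an assumption made for illustration: locations are modeled as hypothetical `(rack, node)` tuples, and the distance values 0, 2, and 4 (same node, same rack, different rack) are a simple convention for this sketch rather than a statement of what the NameNode computes internally.

```python
def distance(a, b):
    """Toy network distance between two (rack, node) locations."""
    if a == b:
        return 0          # same node
    if a[0] == b[0]:
        return 2          # different node on the same rack
    return 4              # node on a different rack


def nearest_datanode(client, replicas):
    """Choose the replica location with the smallest distance to the client."""
    return min(replicas, key=lambda location: distance(client, location))


client = ("rack1", "node3")
replicas = [("rack2", "node7"), ("rack1", "node5"), ("rack3", "node1")]
chosen = nearest_datanode(client, replicas)
print(chosen)  # ('rack1', 'node5')
```

Preferring a replica on the client's own rack keeps the transfer off the links between racks, which is where the reduction in network traffic comes from.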

![HDFS architecture](https://media.geeksforgeeks.org/wp-content/uploads/20200621125932/HDFS-Architecture1.png)

3. YARN (Yet Another Resource Negotiator)

YARN is the framework on which MapReduce works. YARN performs 2 operations: job scheduling and resource management. The purpose of job scheduling is to divide a large task into small jobs so that the jobs can be assigned to various slaves in a Hadoop cluster and processing can be maximized. The Job Scheduler also keeps track of which jobs are important, which have higher priority, the dependencies between jobs, and other information such as job timing. The Resource Manager manages all the resources that are made available for running a Hadoop cluster.
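To make the interplay of job priority and available resources concrete, here is a toy Python sketch. It is not YARN's scheduler and does not use any YARN API: the `schedule` function, the job tuples, and the single pool of memory are all hypothetical simplifications, and job dependencies are ignored.

```python
import heapq


def schedule(jobs, total_memory_mb):
    """Start jobs in priority order while memory remains.

    jobs: list of (priority, name, memory_mb); a lower number means higher priority.
    Returns (started job names, waiting job names, free memory in MB).
    """
    queue = list(jobs)
    heapq.heapify(queue)              # orders jobs by priority number
    free = total_memory_mb
    started, waiting = [], []
    while queue:
        _priority, name, memory_mb = heapq.heappop(queue)
        if memory_mb <= free:
            free -= memory_mb         # reserve resources for this job
            started.append(name)
        else:
            waiting.append(name)      # not enough resources right now
    return started, waiting, free


jobs = [(2, "report", 4096), (1, "etl", 6144), (3, "adhoc", 2048)]
started, waiting, free = schedule(jobs, total_memory_mb=8192)
print(started, waiting, free)
```

In this run the highest-priority job takes most of the memory, the second job has to wait, and the smaller third job still fits, which shows why a scheduler has to track both priority and resource availability.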

Features of YARN:

- Multi-Tenancy

- Scalability

- Cluster utilization

4. Hadoop Common or Common Utilities

Hadoop Common, or Common Utilities, is nothing more than the Java libraries and Java files, or we can say the Java scripts, needed by all the other components present in a Hadoop cluster. HDFS, YARN, and MapReduce use these utilities to run the cluster. Hadoop Common assumes that hardware failure in a Hadoop cluster is common, so failures need to be handled automatically in software by the Hadoop framework.