There are several ways to install PySpark depending on your environment and use case. You can install only one PySpark package and connect to an existing cluster or install full Apache Spark (includes the PySpark package) to set up your own cluster.

In this article, I will cover step by step the installation of pyspark using pip, Anaconda (conda command), manually on Windows and Mac.

Installation ways

: Download and install manually yourself. Use Python PIP to configure PySpark

- and

- Use Anaconda to configure PySpark with all its features

- Python to run PySpark.

connect to an existing cluster.

. 1: Install python Regardless of the process you use, you must install

If you already have Python, skip this step. Check if you have Python using python -version or python3 -version from the command line.

On Windows: Download Python from Python.org and install it.

On Mac – Install python using the following command. If you do not have a preparation, install it first following https://brew.sh/.

# install Python brew

install python 2. Install

Java

PySpark uses underlying Java, therefore you need to have Java on your Windows or Mac. Since Java is a third party, you can install it using Homebrew for Mac and download and install it manually for Windows. Since Oracle Java is no longer open source, I am using OpenJDK version 11.

On Windows – Download OpenJDK from here and install it

.

On Mac: Run the following command in the terminal to

install Java. # install Java brew install [email protected]

3. Install PySpark

3.1. Download and manually

install PySpark

PySpark is a Spark library written in Python for running Python applications using the capabilities of Apache Spark. therefore, you can install PySpark with all its features by installing Apache Spark.

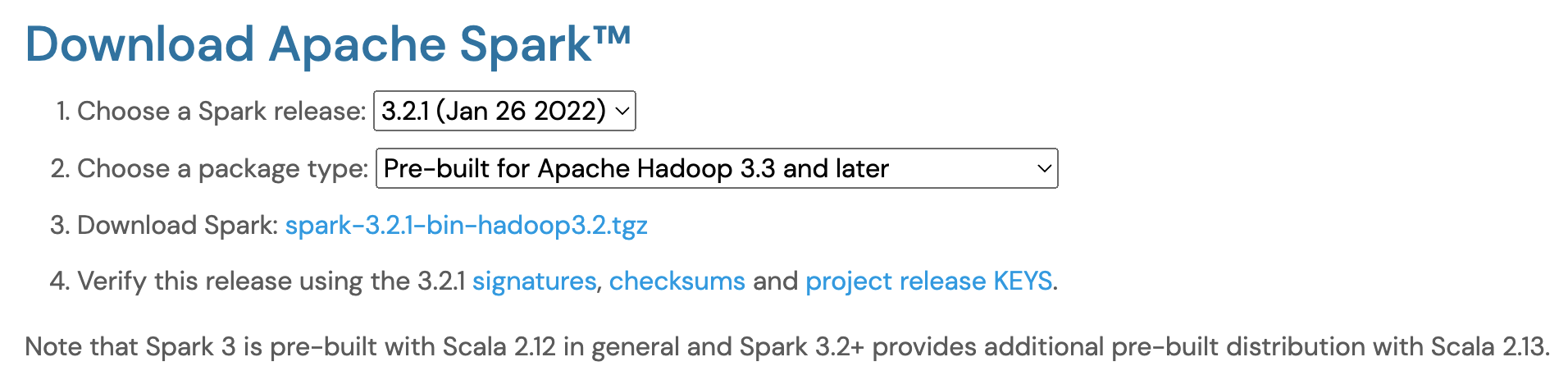

On the Apache Spark download page, select the “Download Spark (item 3)” link to download. If you want to use a different version of Spark & Hadoop, select the one you want from the drop-down menus, and the link in point 3 changes to the selected version and provides you with an updated link to download.

After downloading, unzip the binary and copy the underlying folder spark-3.2.1-bin-hadoop3.2 to /your/home/directory/ On

Windows: Unzip the binary using 7zip

.

On Mac: Run

the following command # Unzip the tar tar -xzf

spark-3.2.1-bin-hadoop3.2.tgz

file Now set the following environment variables. On Windows: Set

the following environment variables.

If you’re not sure, Google it.

SPARK_HOME = c:\your\home\directory\spark-3.2.1-bin-hadoop3.2 HADOOP_HOME = c:\your\home\directory\spark-3.2.1-bin-hadoop3.2 PATH = %PATH%;%SPARK_HOME%\bin

On Mac: Depending on your version, open .bash_profile .bashrc or .zshrc file and add the following lines. After adding reopen the session/terminal.

export SPARK_HOME = /your/home/directory/spark-3.2.1-bin-hadoop3.2 export HADOOP_HOME = /your/home/directory/spark-3.2.1-bin-hadoop3.2 export PATH = $PATH:$SPARK_HOME/bin

The next step is only required for Windows. Download the winutils.exe file from winutils and copy it to the %SPARK_HOME%\bin folder. Winutils are different for each version of Hadoop, so download the correct version of https://github.com/steveloughran/winutils

This completes the installation of Apache Spark to run PySpark on Windows

.

3.2. Installing

PySpark using pip Alternatively, you can install only one PySpark package using the pip python installer. Note that with Python

pip you can install only the PySpark package

that is used to test your jobs locally or run your jobs on an existing cluster running with Yarn, Standalone, or Mesos. It does not contain features/libraries to configure your own cluster. If you want PySpark with all its features, including starting your own cluster, install it from Anaconda or using the above approach.

Install pip on Mac and Windows: Follow the instructions below to install pip.

#Install pip https://pip.pypa.io/en/stable/installing/

For Python users, PySpark provides pip installation from PyPI. Python pip is a package manager that is used to install and uninstall third-party packages that are not part of the Python standard library. Using pip you can install/uninstall/update/downgrade any Python library that is part of the Python package index.

If you already have pip installed, update pip to the latest version before

installing PySpark. # Install pyspark from pip pip install pyspark

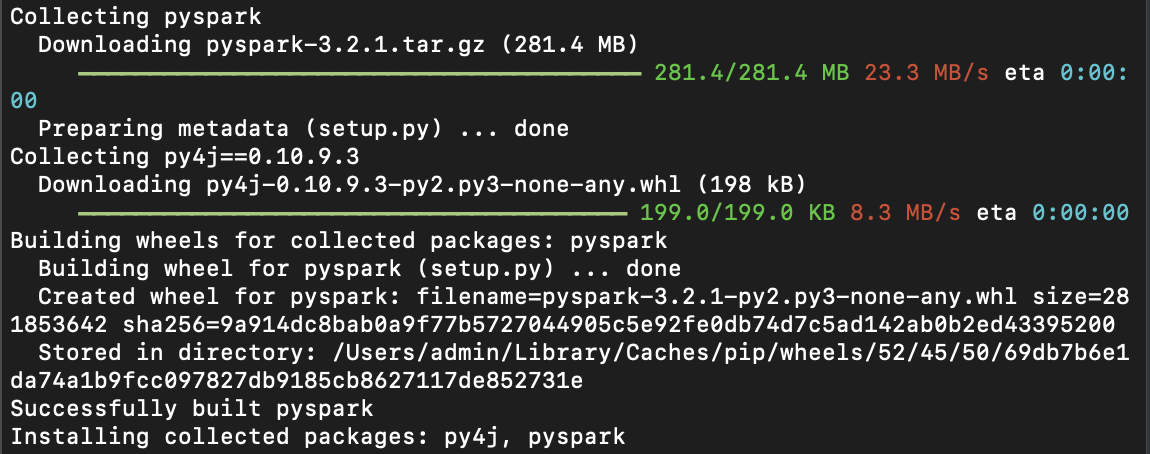

This pip command starts collecting the PySpark package and installing it. You should see something like this below on the console if you’re using Mac.

As I said earlier, this does not contain all the features of Apache Spark, therefore you cannot set up your own cluster, but use it to connect to the existing cluster to run jobs and run jobs locally

.

3.3. Using

Anaconda

Follow Install PySpark using Anaconda and run Jupyter notebook

4. Test installing

PySpark from the shell

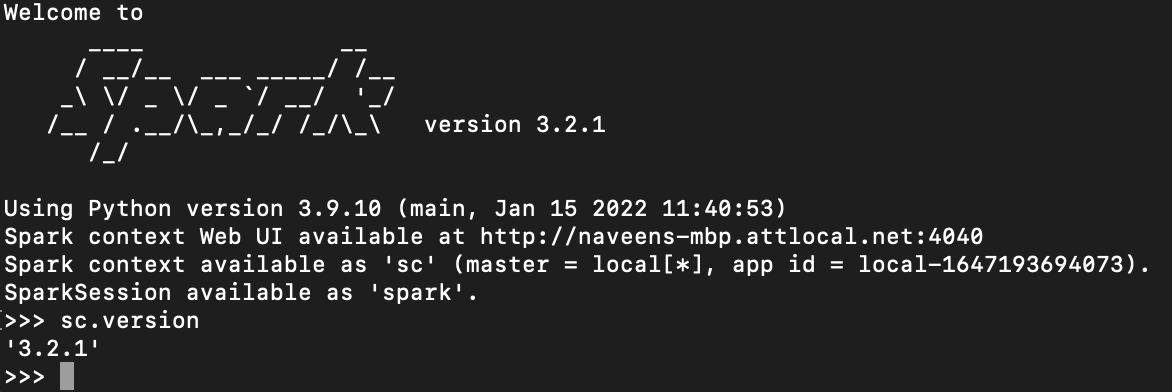

Regardless of which method you have used, once you successfully install PySpark, launch pyspark shell by entering pyspark from the command line. PySpark shell is a REPL that is used to test and learn pyspark statements.

To submit a job on the cluster, use the spark-submit command that comes with install

.

If you have any problems setting up PySpark on Mac and Windows by following the steps above, please leave me a comment. I will be happy to help you and correct the steps.

Happy learning!!

Related Articles

Using PySpark shell commands with examples Install PySpark on

- Jupyter on Mac using Homebrew

- Dynamic way to do ETL through PysparkDifference

- between spark-submit vs pyspark commands

- – What is SparkSession?

- window functions

- How to import PySpark into Python Script

How to install PySpark on Windows

? PySpark

PySpark